Introduction: Data to AI, as Oil to Industry – A Vast Potential Awaiting Extraction

In the current surge of artificial intelligence (AI), data is often likened to the fuel that drives progress. Professor Fei-Fei Li’s recent remark that “there is no shortage of AI training data; vast amounts in vertical domains remain unexplored” points to a new frontier for AI development and raises a practical question: how can we unlock the potential of this vertical data efficiently and in compliance with the law? This article, centered on “Vertical AI Data Mining,” examines the current state of the field, its challenges, and possible solutions, and introduces Pangolin Scrape API, a tool designed to improve the precision and efficiency of data extraction.

The State of Vertical Data: Uncharted Digital Treasures

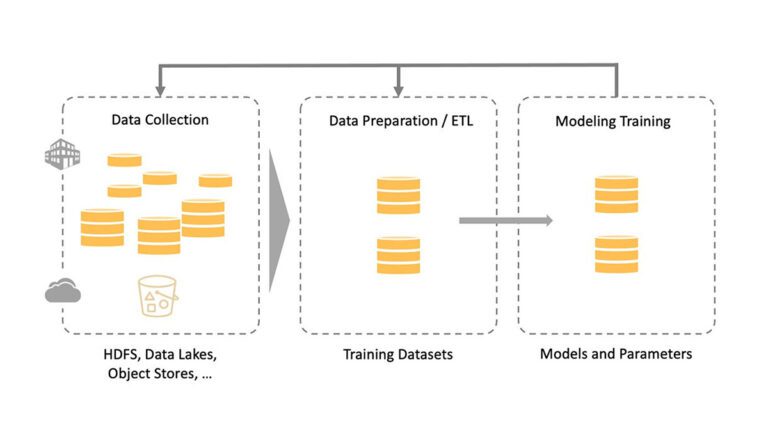

Within sectors such as finance, healthcare, education, and agriculture, immense datasets resemble buried gold mines, ripe for exploitation yet largely untapped. These data, imbued with sector-specific insights, are pivotal for enhancing AI models’ industry adaptability and accuracy. However, their utilization is often hindered by data silos, inconsistent formats, and high barriers to access.

Challenge Front: The Three Hurdles of Vertical Data Mining

- Data Silos and Integration Issues – Diverse standards across verticals create isolated data pools, demanding costly consolidation efforts.

- Legal and Privacy Protections – Regulations such as the EU’s GDPR and China’s Personal Information Protection Law (PIPL) impose strict limits on data collection and use, making lawful acquisition a major obstacle.

- Technology and Tool Selection – The complexity of sector-specific data necessitates highly customized extraction and processing technologies, emphasizing the importance of choosing the right tools.

Solutions: Charting a Course Through the Ice, Combining Tech and Strategy

- Establishing Industry Data Sharing Mechanisms – Encouraging collaboration among associations, governments, and enterprises to set unified standards and facilitate data exchange.

- Strengthening Compliance Frameworks – Developing data handling processes in line with international and domestic legal requirements, ensuring the legality of data gathering, storage, and use.

- Introducing Smart Scraping Tools: Pangolin Scrape API – Tailored to the needs of vertical data extraction, Pangolin Scrape API emphasizes efficiency, compatibility, and intelligent features. It supports customizable crawler configurations, extracts structured data, and provides data cleaning capabilities, which helps reduce legal risk while maintaining data quality.
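
To make the idea of data cleaning concrete, here is a minimal, generic sketch of what a cleaning step for scraped records might look like. It is not the Pangolin Scrape API interface; the function name `clean_records` and the `name` and `price` fields are hypothetical and chosen only for illustration.

```python
import re
from typing import Dict, Iterable, List


def clean_records(raw_records: Iterable[Dict[str, object]]) -> List[Dict[str, object]]:
    """Normalize whitespace, parse prices, and drop incomplete or duplicate rows.

    Hypothetical helper: the field names are assumptions, not a vendor schema.
    """
    seen = set()
    cleaned = []
    for record in raw_records:
        # Collapse runs of whitespace left over from page layout.
        name = " ".join(str(record.get("name", "")).split())
        # Keep only digits and the decimal point, e.g. "$1,299.00" -> "1299.00".
        price_text = re.sub(r"[^0-9.]", "", str(record.get("price", "")))
        if not name or not price_text:
            continue  # a required field is missing
        try:
            price = float(price_text)
        except ValueError:
            continue  # malformed number such as "1.2.3"
        key = (name.lower(), price)
        if key in seen:
            continue  # case-insensitive duplicate
        seen.add(key)
        cleaned.append({"name": name, "price": price})
    return cleaned


raw = [
    {"name": "  Blood  Pressure Monitor ", "price": "$1,299.00"},
    {"name": "blood pressure monitor", "price": "1299"},
    {"name": "", "price": "5"},
    {"name": "Thermometer", "price": "N/A"},
]
result = clean_records(raw)
```

In this sketch, the second row is dropped as a duplicate of the first, and the last two rows are dropped because a required field is empty or unparseable. A real pipeline would likely add sector-specific rules, such as unit conversion or code-list validation.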

Pangolin Scrape API: Setting New Standards in Data Extraction

- Key Features:

  - Adaptive Learning Engine – Automatically adjusts to different website structures, minimizing manual intervention.

  - Advanced Data Parsing – Handles complex page structures, extracting structured records from unstructured content.

  - Security and Compliance Assurance – Integrated compliance checks help prevent legal breaches.

  - Efficient Data Delivery – Real-time data push that integrates with enterprise databases.

- Industry Application Cases – Real-world scenarios in which Pangolin Scrape API has been deployed in sectors such as healthcare and fintech to get more value from data.
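
As an illustration of one kind of pre-fetch compliance check, the sketch below filters a list of URLs against a site's robots.txt rules using Python's standard `urllib.robotparser` module. This is an assumption about what such a check could include, not a description of how Pangolin Scrape API implements it. The helper names and the `example-bot` user agent are hypothetical, and honoring robots.txt does not by itself establish compliance with GDPR or PIPL.

```python
from typing import Iterable, List
from urllib.robotparser import RobotFileParser


def build_robots_checker(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def filter_allowed(
    urls: Iterable[str],
    parser: RobotFileParser,
    user_agent: str = "example-bot",  # hypothetical crawler name
) -> List[str]:
    """Keep only the URLs that the robots.txt rules allow this agent to fetch."""
    return [url for url in urls if parser.can_fetch(user_agent, url)]


robots_txt = "User-agent: *\nDisallow: /private/\n"
checker = build_robots_checker(robots_txt)
candidates = [
    "https://example.com/public/page",
    "https://example.com/private/records",
]
allowed = filter_allowed(candidates, checker)
```

Here only the `/public/page` URL passes the filter. Running the check before any request is sent keeps disallowed pages out of the crawl queue entirely, rather than discarding their content afterwards.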

Conclusion: The Future Outlook for Data Mining – From Quantity to Quality

With advancing technology and deepening industry cooperation, vertical data mining will progressively dismantle barriers, enabling the leap from data accumulation to intelligent application. The future of AI promises greater precision and personalization, all rooted in the thorough exploration and effective use of these “unexplored” datasets. Tools like Pangolin Scrape API can accelerate this process, supporting closer integration of AI with vertical sectors and helping usher in an era of data-driven innovation.