Introduction

In an era of information overload, data has become a critical basis for business decisions. Acquiring and using large volumes of data effectively, however, remains a significant challenge for companies. Automated data scrapers have emerged as a practical tool for this problem. This article covers the background and importance of automated data scrapers, their application scenarios, and their technical challenges. It then introduces a new product, Pangolin Scraper, and analyzes its market prospects and challenges.

Part One: Overview of Automated Data Scrapers

Definition and Classification

Definition of Automated Data Scrapers

Automated data scrapers are tools that use computer programs to retrieve information automatically from the internet or other data sources. They collect and organize data by simulating human browsing of web pages, calling APIs, and similar methods, which greatly improves the efficiency and consistency of data acquisition.

Introduction to Different Types of Automated Data Scrapers

Automated data scrapers can be classified into the following categories based on their working methods and application scenarios:

- Web Crawlers: Web crawlers are automated programs that systematically browse the internet and capture web content. These tools are typically used in search engine indexing, content aggregation, and market analysis.

- API Scrapers: API scrapers retrieve structured data by calling public or private application programming interfaces (APIs). This method is commonly used for collecting social media data, financial data, etc.

- Screen Scraping Tools: These tools scrape data directly from user interfaces and suit scenarios where data cannot be obtained through crawlers or APIs.

Main Functions

Data Scraping

Data scraping is the core function of automated data scrapers. By simulating user behavior, automated scrapers can systematically access target websites or data sources to obtain the required information.

Data Cleaning

The data obtained is often messy and contains a lot of useless information. The data cleaning function can automatically filter and organize the data to ensure the quality of the final data.
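
As a rough illustration of what such a cleaning step might look like, the sketch below normalizes whitespace, decodes HTML entities, and drops empty or duplicate rows. The function name `clean_records` and the `title`/`price` fields are hypothetical, chosen only for this example; real pipelines usually need rules specific to each data source.

```python
import html
import re


def clean_records(raw_records):
    """Normalize scraped text fields and drop empty or duplicate rows."""
    seen = set()
    cleaned = []
    for record in raw_records:
        # Decode HTML entities and collapse runs of whitespace in every field.
        row = {
            key: re.sub(r"\s+", " ", html.unescape(str(value))).strip()
            for key, value in record.items()
        }
        # Skip rows where every field ended up empty.
        if not any(row.values()):
            continue
        # Use the sorted field pairs as a duplicate-detection key.
        fingerprint = tuple(sorted(row.items()))
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        cleaned.append(row)
    return cleaned


# Hypothetical raw rows, as they might come out of a scraper.
raw = [
    {"title": "  Blue   Widget ", "price": "19.99"},
    {"title": "Blue Widget", "price": "19.99"},
    {"title": "Tom &amp; Co. Lamp", "price": " 5.00 "},
    {"title": "", "price": "  "},
]
result = clean_records(raw)
```

Here the second row is dropped as a duplicate of the first once whitespace is normalized, and the fully empty row is discarded.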

Data Storage

To facilitate subsequent analysis, the collected data needs to be properly stored. Automated data scrapers typically support storing data in local files, databases, or cloud storage.
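
For the database case, a minimal sketch using Python's built-in `sqlite3` module is shown below. The `products` table and its columns are assumptions made for this example, and an in-memory database is used so the snippet runs without creating files.

```python
import sqlite3


def store_products(conn, products):
    """Insert or refresh scraped product rows, keyed by SKU."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS products ("
        "sku TEXT PRIMARY KEY, title TEXT NOT NULL, price REAL)"
    )
    # INSERT OR REPLACE means a re-scrape updates a row instead of duplicating it.
    conn.executemany(
        "INSERT OR REPLACE INTO products (sku, title, price) "
        "VALUES (:sku, :title, :price)",
        products,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")  # swap for a file path to persist data
store_products(conn, [
    {"sku": "A1", "title": "Blue Widget", "price": 19.99},
    {"sku": "B2", "title": "Desk Lamp", "price": 5.00},
])
# A later scrape sees a new price for the same SKU.
store_products(conn, [{"sku": "A1", "title": "Blue Widget", "price": 18.49}])

rows = conn.execute(
    "SELECT sku, title, price FROM products ORDER BY sku"
).fetchall()
```

Keying rows on a stable identifier is one common design choice; it keeps repeated scraping runs from inflating the table.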

Technical Foundation

Web Crawler Technology

Web crawler technology is the cornerstone of automated data scrapers. By simulating browser behavior, crawlers can automatically access web pages, parse HTML structures, and extract useful information. Modern crawlers usually adopt multi-threaded or distributed architectures to improve scraping efficiency.
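
The parsing and multi-threading ideas above can be sketched with the Python standard library alone. To keep the example runnable offline, the pages are in-memory HTML strings; in a real crawler the thread pool would typically wrap the download step, where network waits dominate. The names `LinkExtractor`, `extract_links`, and `parse_pages` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect absolute URLs from the <a href="..."> tags of one page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page URL.
                self.links.append(urljoin(self.base_url, value))


def extract_links(page):
    """Parse one (url, html) pair and return (url, list_of_links)."""
    url, html_text = page
    parser = LinkExtractor(url)
    parser.feed(html_text)
    return url, parser.links


def parse_pages(pages, max_workers=4):
    """Process several pages concurrently with a thread pool."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(extract_links, pages))


# Hypothetical pages standing in for downloaded HTML.
pages = [
    ("https://example.com/", '<a href="/a">A</a> <a href="b.html">B</a>'),
    ("https://example.com/shop/", '<a href="item?id=1">Item</a><a>no href</a>'),
]
link_map = parse_pages(pages)
```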

Natural Language Processing

Natural Language Processing (NLP) technology is used to understand and process text data. With NLP techniques such as sentiment analysis and keyword extraction, automated data scrapers can pull key information out of unstructured data.
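
Keyword extraction can be approximated very simply by counting word frequencies after removing common stopwords. The sketch below takes that approach; it is a deliberately naive stand-in for a full NLP pipeline, and the stopword list, the `extract_keywords` name, and the sample review are all assumptions for illustration.

```python
import re
from collections import Counter

# A tiny illustrative stopword list; real systems use much larger ones.
STOPWORDS = {
    "the", "a", "an", "and", "is", "it", "this", "to", "of",
    "for", "in", "was", "but", "very",
}


def extract_keywords(text, top_n=3):
    """Return the most frequent non-stopword terms in the text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(
        token for token in tokens
        if token not in STOPWORDS and len(token) > 2
    )
    return [word for word, _ in counts.most_common(top_n)]


review = (
    "The battery life is great. Battery charging was fast, "
    "but the screen is dim and the screen scratches easily. Great battery."
)
keywords = extract_keywords(review)
```

For this sample, "battery" appears most often, followed by "great" and "screen".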

Machine Learning

Machine learning plays a crucial role in data cleaning and analysis. Trained models let automated data scrapers identify and filter out noisy data automatically, improving the accuracy and usability of the results.

Part Two: Application Scenarios of Automated Data Scrapers

Market Analysis

Competitor Analysis

Automated data scrapers can help businesses monitor competitors’ activity, including product information, price changes, and promotional campaigns. By regularly scraping and analyzing this data, companies can adjust their market strategies in a timely manner.

Price Trend Monitoring

Price is a key factor in market competition. Automated data scrapers let businesses monitor market price trends in real time and spot price fluctuations and market opportunities promptly.
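
One simple way to flag price fluctuations is to compare two scraped snapshots and report items whose price moved by more than a chosen percentage. The sketch below assumes prices are stored as plain dictionaries keyed by a hypothetical SKU; the 5% default threshold is an arbitrary choice for illustration.

```python
def detect_price_changes(previous, current, threshold_pct=5.0):
    """Return (sku, old, new, change_pct) for prices that moved past the threshold."""
    alerts = []
    for sku, new_price in current.items():
        old_price = previous.get(sku)
        # Skip items with no usable earlier price (new listings or zero prices).
        if old_price is None or old_price == 0:
            continue
        change_pct = (new_price - old_price) / old_price * 100
        if abs(change_pct) >= threshold_pct:
            alerts.append((sku, old_price, new_price, round(change_pct, 1)))
    return alerts


# Hypothetical snapshots from two consecutive scraping runs.
yesterday = {"A1": 20.00, "B2": 50.00, "C3": 10.00}
today = {"A1": 18.00, "B2": 51.00, "C3": 10.00, "D4": 7.50}
alerts = detect_price_changes(yesterday, today)
```

Only `A1` is reported here: it dropped by 10%, while `B2` moved by 2% and `D4` has no earlier price to compare against.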

Social Media Monitoring

Brand Reputation Management

In the age of social media, brand reputation has a significant impact on a company. Automated data scrapers can monitor brand-related information on social media in real time, helping companies identify and respond to potential reputation risks.

Public Opinion Monitoring

Public opinion monitoring is an important way to understand public views and market trends. Automated data scrapers allow companies to acquire and analyze public opinion on social media in real time, gaining insight into market movements.

Customer Relationship Management

Customer Data Collection

Customers are a company's most valuable asset. Automated data scrapers can help businesses collect customer data from various channels so they can understand customer needs and behavior and improve customer satisfaction.

Personalized Marketing

By analyzing customer data, companies can develop personalized marketing strategies. Automated data scrapers can help businesses acquire and update customer information in real time, making marketing more effective.

Supply Chain Management

Inventory Monitoring

Automated data scrapers can monitor inventory levels in real time, helping companies optimize inventory management and avoid the costs and risks of holding too much or too little stock.

Logistics Tracking

Logistics tracking is a crucial aspect of supply chain management. Automated data scrapers enable companies to obtain logistics information in real time, improving logistics efficiency and helping products reach customers promptly.

Part Three: Limitations and Challenges of Automated Data Scrapers

Technical Challenges

Anti-Scraping Mechanisms

As automated data scraping technology becomes more prevalent, more and more websites have implemented anti-scraping mechanisms. These mechanisms block automated tools by detecting abnormal traffic and setting access restrictions.
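
A scraper that respects a site's published rules and request rate is less likely to look like abnormal traffic in the first place. The sketch below uses Python's standard `urllib.robotparser` to check a robots.txt policy and a small throttle to space out requests. The robots.txt content, the `ExampleBot` user agent, and the `Throttle` class are assumptions made for this example; the policy is parsed from a string so the snippet runs offline.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would download it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_policy(robots_text):
    """Parse robots.txt text into a policy object."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, delay_seconds, clock=time.monotonic, sleep=time.sleep):
        self.delay = delay_seconds
        self.clock = clock
        self.sleep = sleep
        self.last_request = None

    def wait(self):
        # Sleep only for whatever part of the delay has not already elapsed.
        if self.last_request is not None:
            remaining = self.delay - (self.clock() - self.last_request)
            if remaining > 0:
                self.sleep(remaining)
        self.last_request = self.clock()


USER_AGENT = "ExampleBot"
policy = build_policy(ROBOTS_TXT)
# Fall back to one second when the site does not state a crawl delay.
delay = policy.crawl_delay(USER_AGENT) or 1
throttle = Throttle(delay)

allowed = policy.can_fetch(USER_AGENT, "https://example.com/products/1")
blocked = policy.can_fetch(USER_AGENT, "https://example.com/private/report")
```

In use, a scraper would call `policy.can_fetch(...)` before each request and `throttle.wait()` just before sending it.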

Data Quality Control

Data quality is the foundation of data analysis. Automated data scrapers may encounter issues such as inconsistent data formats and data loss during scraping, affecting the final data quality.
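
A basic quality gate can catch missing fields and malformed values before records reach analysis. The following sketch checks a few hypothetical fields (`sku`, `title`, `price`) and returns a list of problems per record; the field names and rules are assumptions chosen for illustration.

```python
def is_blank(value):
    """Treat None and whitespace-only strings as missing."""
    return value is None or str(value).strip() == ""


def validate_record(record):
    """Return a list of problems found in one scraped record."""
    problems = []
    for field in ("sku", "title", "price"):
        if is_blank(record.get(field)):
            problems.append(f"missing {field}")

    price = record.get("price")
    if not is_blank(price):
        try:
            # Accept strings such as "$19.99" or "1,299.00".
            value = float(str(price).replace("$", "").replace(",", ""))
            if value < 0:
                problems.append("negative price")
        except ValueError:
            problems.append("unparseable price")
    return problems


# Hypothetical scraped records with typical defects.
records = [
    {"sku": "A1", "title": "Blue Widget", "price": "$1,299.00"},
    {"sku": "B2", "title": "", "price": "N/A"},
    {"sku": "C3", "title": "Desk Lamp", "price": None},
]
report = {record["sku"]: validate_record(record) for record in records}
```

Records with an empty problem list can pass straight through, while the rest can be logged or queued for a re-scrape.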

Legal and Ethical Issues

Data Privacy

With increasingly strict data privacy regulations, companies must comply with relevant laws to protect user privacy and avoid legal risks when using automated data scrapers.
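
One small, practical step in this direction is to strip obvious personal identifiers from scraped text before storing it. The sketch below redacts email addresses with a regular expression. It is only an illustration of the idea: the pattern is a simplified assumption, and it does not by itself make a pipeline compliant with any particular regulation.

```python
import re

# Simplified email pattern; it will not match every valid address format.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text, placeholder="[email removed]"):
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_RE.sub(placeholder, text)


sample = "Great product! Contact me at jane.doe@example.com for details."
redacted = redact_emails(sample)
```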

Copyright Issues

Many data sources are protected by copyright. Unauthorized automated data scraping may infringe on the copyright of data sources, leading to legal disputes.

Resource Limitations

Hardware Resources

Automated data scraping requires significant computational resources and storage space. For small businesses, hardware resource limitations may become a bottleneck.

Maintenance Costs

Maintaining automated data scrapers requires professional technical personnel and continuous investment. As data sources continually change, scrapers need constant updates and optimization, increasing maintenance costs.

Part Four: Introduction to Pangolin Scraper

Product Overview

Definition and Features of Pangolin Scraper

Pangolin Scraper is an emerging automated data scraping tool focused on providing efficient and user-friendly data scraping solutions. Its features include efficient data scraping capabilities, an intuitive interface, and a design that requires no complex configurations.

Core Functions

One-Click Scraping of Amazon Site Data

Pangolin Scraper supports one-click scraping of various data from Amazon sites, including product information, prices, reviews, and more, helping businesses quickly obtain market information.

Specified ZIP Code Data Scraping

Users can specify ZIP code areas, and Pangolin Scraper will automatically scrape relevant data for that area, facilitating regional market analysis.

Real-Time Scraping of Amazon SP Ads

Pangolin Scraper can scrape Amazon Sponsored Products (SP) ad data in real time, helping businesses monitor ad performance and optimize ad placement strategies.

Technical Advantages

No Complex Configuration Needed

Pangolin Scraper is designed to be simple: users can start scraping data without complex configuration, which significantly lowers the barrier to entry.

User-Friendly Interface

Pangolin Scraper offers an intuitive and user-friendly interface, enabling users to easily set up scraping tasks and view scraping results.

Efficient Data Scraping Capabilities

Thanks to its technical architecture, Pangolin Scraper can acquire large volumes of data quickly and reliably.

Use Cases

Showcasing Pangolin Scraper’s Application Examples in Different Scenarios

Pangolin Scraper is widely used in market analysis, social media monitoring, customer relationship management, and other scenarios. Concrete use cases in each of these areas show its features and flexibility more directly than a feature list can.

Part Five: Market Prospects and Challenges of Pangolin Scraper

Market Demand Analysis

Industry Demand Growth

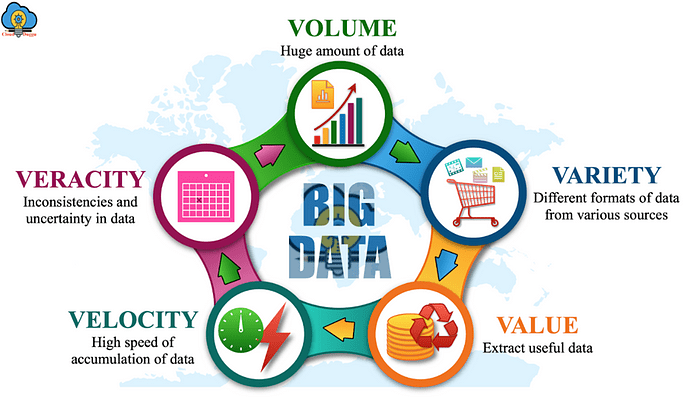

With the advent of the big data era, the demand for automated data scraping tools is continuously growing. Various industries require efficient data scraping tools to support business decisions, and Pangolin Scraper is an excellent solution to meet this demand.

Potential User Groups

Potential users of Pangolin Scraper include e-commerce businesses, market research institutions, brand management companies, and more. These users have a strong demand for efficient and reliable data scraping tools.

Competition Analysis

Comparison with Existing Automated Data Scrapers

Compared to traditional data scrapers, Pangolin Scraper has significant advantages in ease of use, efficiency, and breadth of functionality. A side-by-side comparison with existing products shows where it is most competitive.

Market Positioning

Pangolin Scraper is positioned in the mid-to-high-end market, primarily targeting businesses and institutions with high data scraping requirements. Through precise market positioning, Pangolin Scraper can better meet user needs and expand market share.

Future Development

Technical Upgrades

As the field evolves, Pangolin Scraper will keep upgrading its underlying technology to improve scraping efficiency and accuracy and to maintain its technological lead.

Function Expansion

In the future, Pangolin Scraper will continue to expand its functions, adding support for more data sources and analytical tools to meet the ever-changing needs of users.

Conclusion

Automated data scrapers play an increasingly important role in modern business. By efficiently and accurately acquiring data, companies can make better decisions and enhance competitiveness. Pangolin Scraper, as an emerging automated data scraping tool, demonstrates tremendous market potential and value with its powerful features and user-friendly design. We encourage readers to try Pangolin Scraper and experience the convenience and efficiency it brings. If you are interested in Pangolin Scraper, please contact us for more information and trial links.

In the future, Pangolin Scraper will continue to innovate, providing users with better services. We look forward to Pangolin Scraper becoming your reliable assistant and helping your business thrive.