Introduction

The Importance and Application of Amazon Best Sellers Data

Amazon Best Sellers data represents the most popular products in the market. For e-commerce analysts and market researchers, this data provides valuable insights. By analyzing best sellers data, one can understand current market trends, consumer preferences, and the characteristics of popular products.

The Role of Data Scraping in E-Commerce Analysis and Market Research

Data scraping is crucial in e-commerce analysis and market research. By collecting and analyzing data, businesses can monitor competitors’ actions, formulate product positioning and market strategies, and optimize inventory management and marketing strategies.

The Motivation and Goals of Using Python for Data Scraping

Python is the preferred language for data scraping due to its powerful libraries and simple syntax. Using Python, we can automate the process of scraping Amazon Best Sellers data for real-time analysis and decision support.

1. Why Scrape Amazon Best Sellers Data

Market Trend Analysis

By scraping Amazon Best Sellers data, we can identify trending products in the market and understand current consumer demands and preferences. This helps businesses adjust product strategies and predict market trends.

Competitor Monitoring

Monitoring competitors’ best-selling products and sales strategies helps businesses find opportunities and threats in the market and adjust their own marketing and sales strategies in time.

Product Positioning and Market Strategy Formulation

By analyzing best-selling product data, businesses can better position their own products and formulate more targeted market strategies to enhance market competitiveness.

2. Challenges in Scraping Amazon Best Sellers Data

Technical Challenges of Dynamic Content Loading

Amazon Best Sellers pages often use dynamic loading techniques, where data is not embedded directly in the HTML but loaded dynamically via JavaScript, posing a challenge for data scraping.

Circumventing Anti-Scraping Mechanisms

Amazon’s website has complex anti-scraping mechanisms, including IP bans and CAPTCHA verification. Effectively circumventing these mechanisms is a key issue in the data scraping process.

Ensuring Data Timeliness and Accuracy

To ensure data timeliness and accuracy, scrapers need to handle frequent data updates and changes while ensuring the stability and completeness of the scraped data.

3. Environment Setup and Tool Selection

Setting Up Python Environment

First, we need to set up the Python environment. It’s recommended to use Anaconda to manage the Python environment and dependencies, facilitating subsequent development and maintenance.

Installing Necessary Libraries: Selenium, Webdriver Manager, pandas, etc.

After setting up the Python environment, install the necessary libraries:

pip install selenium webdriver-manager pandas

- Selenium: Used for automating browser operations.

- Webdriver Manager: Simplifies the management of browser drivers.

- pandas: Used for data processing and analysis.

Development Tool Selection and Configuration

It is recommended to use VSCode or PyCharm as development tools. These tools offer powerful code editing and debugging features, improving development efficiency.

4. Web Scraping Basics: Understanding Amazon Website Structure

Analyzing Amazon Best Sellers Page

First, analyze the structure of the Amazon Best Sellers page to understand how data is loaded and the position of page elements. This can be done using the browser’s developer tools (F12).

Inspecting and Locating Page Elements

Using the developer tools, inspect the HTML structure of the page to find elements containing product information. These elements are usually marked by specific tags and attributes, such as product names in <span> tags and prices in <span class="price">.

5. Writing the Scraper Script

Initializing Webdriver

First, initialize the Webdriver:

from selenium import webdriver

from webdriver_manager.chrome import ChromeDriverManager

driver = webdriver.Chrome(ChromeDriverManager().install())

This code uses Webdriver Manager to automatically download and configure the Chrome driver.

Configuring and Launching the Browser

You can configure browser options, such as headless mode, to improve scraping efficiency:

options = webdriver.ChromeOptions()

options.add_argument('--headless')

driver = webdriver.Chrome(ChromeDriverManager().install(), options=options)

Navigating to the Target Page

Use Selenium to navigate to the Amazon Best Sellers page:

url = 'https://www.amazon.com/Best-Sellers/zgbs'

driver.get(url)

Using Selenium to Open Amazon Best Sellers Page

Ensure the page is fully loaded before scraping data:

import time

time.sleep(5) # Wait for the page to load completely

Parsing and Locating Elements

Use XPath or CSS selectors to locate product data:

products = driver.find_elements_by_xpath('//div[@class="zg-item-immersion"]')

6. Practical Data Scraping

Handling Dynamic Loading Content

For dynamically loaded content, use Selenium’s wait mechanism:

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

wait = WebDriverWait(driver, 10)

products = wait.until(EC.presence_of_all_elements_located((By.XPATH, '//div[@class="zg-item-immersion"]')))

Scraping Product Information

Iterate through the product list and extract names, prices, ratings, and sales data:

data = []

for product in products:

name = product.find_element_by_xpath('.//span[@class="p13n-sc-truncated"]').text

price = product.find_element_by_xpath('.//span[@class="p13n-sc-price"]').text

rating = product.find_element_by_xpath('.//span[@class="a-icon-alt"]').text

data.append({'name': name, 'price': price, 'rating': rating})

Handling Pagination and Infinite Scrolling

For pagination and infinite scrolling, write code to automate page flipping and scrolling:

while True:

try:

next_button = driver.find_element_by_xpath('//li[@class="a-last"]/a')

next_button.click()

time.sleep(5) # Wait for the new page to load

products = driver.find_elements_by_xpath('//div[@class="zg-item-immersion"]')

except:

break # No more pages, exit the loop

7. Data Storage and Processing

Using pandas to Process and Store Scraped Data

Convert the scraped data to a DataFrame and save it as a CSV file:

import pandas as pd

df = pd.DataFrame(data)

df.to_csv('amazon_best_sellers.csv', index=False)

Data Cleaning and Formatting

Clean and format the data for subsequent analysis:

df['price'] = df['price'].str.replace('$', '').astype(float)

df['rating'] = df['rating'].str.extract(r'(\d+\.\d+)').astype(float)

8. Precautions and Common Issues

Complying with the robots.txt Protocol

Before scraping data, check the target website’s robots.txt file to ensure that the scraping behavior complies with the site’s regulations.

Simulating Normal User Behavior to Avoid Detection

Simulate normal user behavior by adding random delays and switching User-Agent to reduce the risk of detection:

import random

time.sleep(random.uniform(2, 5)) # Random delay

Handling Exceptions and Errors

Include exception handling mechanisms in the scraper script to improve robustness:

try:

# Scraper code

except Exception as e:

print(f'Error: {e}')

driver.quit()

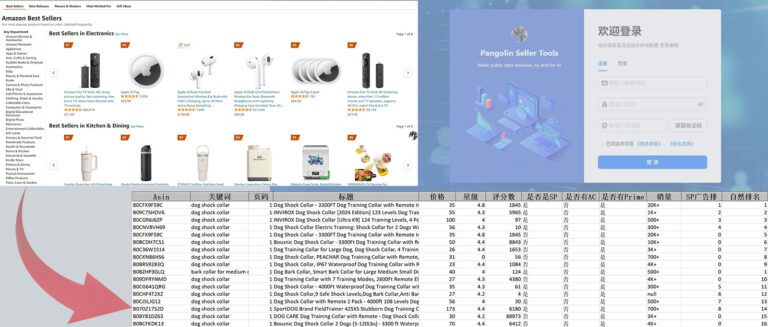

9. Case Study

Demonstrating Scraper Deployment and Operation with a Specific Case

Below is a detailed case study showcasing a complete scraper script, running tests, and validating its functionality and performance.

Complete Scraper Script

import time

import random

import pandas as pd

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

from webdriver_manager.chrome import ChromeDriverManager

# Initialize WebDriver

options = webdriver.ChromeOptions()

options.add_argument('--headless')

driver = webdriver.Chrome(ChromeDriverManager().install(), options=options)

# Navigate to Amazon Best Sellers page

url = 'https://www.amazon.com/Best-Sellers/zgbs'

driver.get(url)

time.sleep(5) # Wait for the page to load completely

# Scrape data

data = []

while True:

wait = WebDriverWait(driver, 10)

products = wait.until(EC.presence_of_all_elements_located((By.XPATH, '//div[@class="zg-item-immersion"]')))

for product in products:

try:

name = product.find_element_by_xpath('.//span[@class="p13n-sc-truncated"]').text

except:

name = None

try:

price = product.find_element_by_xpath('.//span[@class="p13n-sc-price"]').text

except:

price = None

try:

rating = product.find_element_by_xpath('.//span[@class="a-icon-alt"]').text

except:

rating = None

data.append({'name': name, 'price': price, 'rating': rating})

try:

next_button = driver.find_element_by_xpath('//li[@class="a-last"]/a')

next_button.click()

time.sleep(random.uniform(2, 5)) # Random delay

except:

break # No more pages, exit the loop

# Save data

df = pd.DataFrame(data)

df.to_csv('amazon_best_sellers.csv', index=False)

# Clean data

df['price'] = df['price'].str.replace('$', '').astype(float)

df['rating'] = df['rating'].str.extract(r'(\d+\.\d+)').astype(float)

Analyzing Key Code and Implementation Logic in the Case Study

- Initializing WebDriver and Configuring the Browser: This part of the code initializes WebDriver and configures headless mode to avoid the browser interface affecting performance.

- Navigating to the Target Page and Waiting for Load: The script navigates to the Amazon Best Sellers page using

driver.get(url)and usestime.sleepto wait for the page to fully load. - Scraping Data: Using a

while Trueloop, the script scrapes data from all pages, processing each product’s information and storing it in thedatalist. - Handling Pagination and Random Delays: Pagination is handled by locating and clicking the “Next” button, and random delays are introduced using

random.uniformto simulate normal user behavior. - Saving and Cleaning Data: The scraped data is saved as a CSV file and cleaned using pandas for easier subsequent analysis.

10. Current State and Challenges of Scraping Amazon Data

Common Practices and Effectiveness in Current Data Scraping

Currently, commonly used tools and methods for data scraping include Selenium, Scrapy, and BeautifulSoup. Each has its pros and cons: Selenium is suitable for scraping dynamically loaded pages, while Scrapy and BeautifulSoup are better for extracting data from static pages.

Main Difficulties and Challenges Faced

Key difficulties and challenges in data scraping include:

- Anti-Scraping Mechanisms: Many websites use complex anti-scraping mechanisms like IP bans and CAPTCHA verification, which require solutions like proxy pools and user behavior simulation to circumvent.

- Complex Data Structures: Some pages have complex and variable data structures, requiring flexible parsing code.

- Real-Time Requirements: To ensure data timeliness, scrapers need to run frequently and update data promptly.

11. A Better Alternative: Pangolin Scrape API

Introducing the Features and Advantages of Pangolin Scrape API

Pangolin Scrape API is an efficient data scraping solution that offers capabilities such as avoiding maintenance of scrapers, proxies, and bypassing CAPTCHA, making data scraping more convenient and stable.

No Need to Maintain Scrapers, Proxies, and Bypass CAPTCHA

Using Pangolin Scrape API eliminates the need to manually maintain scraper scripts and proxy pools, and handle complex CAPTCHA, significantly simplifying the data scraping process.

Real-Time Data Retrieval and Ability to Scrape by Specific ZIP Codes

Pangolin Scrape API provides real-time data retrieval and the ability to scrape data by specific ZIP codes, further improving the precision and usefulness of the data. For example, it allows for more granular market analysis by obtaining product data for specific regions.

12. Summary

Summarizing the Steps and Key Points of Implementing Python Scraper

This article provides a detailed overview of the steps and key points in using a Python scraper to scrape Amazon Best Sellers data, including environment setup, writing scraper scripts, handling dynamic content, scraping product information, and data storage and processing.

Emphasizing the Advantages of Pangolin Scrape API as an Alternative

While Python scrapers are powerful in data scraping, Pangolin Scrape API offers a more efficient and stable alternative, simplifying the workflow of data scraping.

Providing Further Learning and Exploration Resources

Recommended further learning and exploration resources include Python scraping tutorials, data analysis tools, etc.: